The Intelligence You've Stopped Noticing

Aditya Kachhawa

Your alarm goes off at 6:47 AM. Not 6:45, not 7:00. The coffee maker starts brewing three minutes later. By the time you shuffle into the kitchen, half-awake, your drink is ready at the exact temperature you prefer, though you never programmed any of this. The system learned. It watched. It adjusted.

You don't think about this anymore. You just drink the coffee.

This is what ambient intelligence looks like: technology that operates so seamlessly in the background that you forget it's making decisions at all. And if you haven't thought about it lately, that's exactly the point.

The Exhaustion Economy

By one widely repeated estimate, we make roughly 35,000 decisions a day. Most are trivial. Should I leave now or in five minutes? Is this email urgent? What should I have for lunch? Do I need an umbrella?

Individually, these choices cost almost nothing. Collectively, they're exhausting.

Ambient AI emerged as a response to this fatigue. It takes the small, repetitive decisions off your plate so you can focus on what actually matters. Or at least, that's the promise. Your phone predicts the next word before you type it. Your calendar blocks travel time before meetings. Your music app queues songs based on your mood at 3 PM on Wednesdays.

The core bargain: give up conscious control of trivial decisions in exchange for mental space.

These systems don't wait for instructions. They watch patterns, make inferences, and act. The intelligence isn't conversational. It's environmental.

And here's what makes it work: you stop checking. Last week, did you verify that your spam filter was making good decisions? Did you question why your navigation app chose that particular route? Probably not. The system earned your trust by being right often enough that you stopped paying attention.

How Invisibility Became the Product

For decades, technology companies competed on features. More buttons. More options. More control. Then something shifted: they realized people didn't want more control; they wanted less friction.

The best technology, it turned out, was technology you stopped noticing.

A spam filter doesn't ask permission before sorting your inbox. Navigation apps reroute you automatically when traffic appears. Smart thermostats learn your schedule and adjust temperatures without consultation. The interface isn't a dashboard you visit; it's the absence of having to think about the problem at all.

Technology wins not by being powerful, but by disappearing entirely.

This works because humans are extraordinarily good at outsourcing cognitive tasks once we trust the system. We stopped double-checking our spell-checkers. We stopped questioning autocorrect. We stopped verifying that our photo albums really did group pictures by face and location. The technology became furniture: reliable, invisible, assumed.

Consider your streaming service. When was the last time you actively chose what to watch? More likely, you scrolled through recommendations, picked something that looked "good enough," and moved on. The system didn't just suggest content; it shaped the boundaries of what you considered. The shows you never saw weren't rejected. They simply never appeared.

But invisibility comes with a cost.

The Geography of Trust

In India, ambient intelligence is playing out differently than in the West, shaped by infrastructure gaps and cost sensitivity. Smart systems here often solve problems of scarcity rather than convenience.

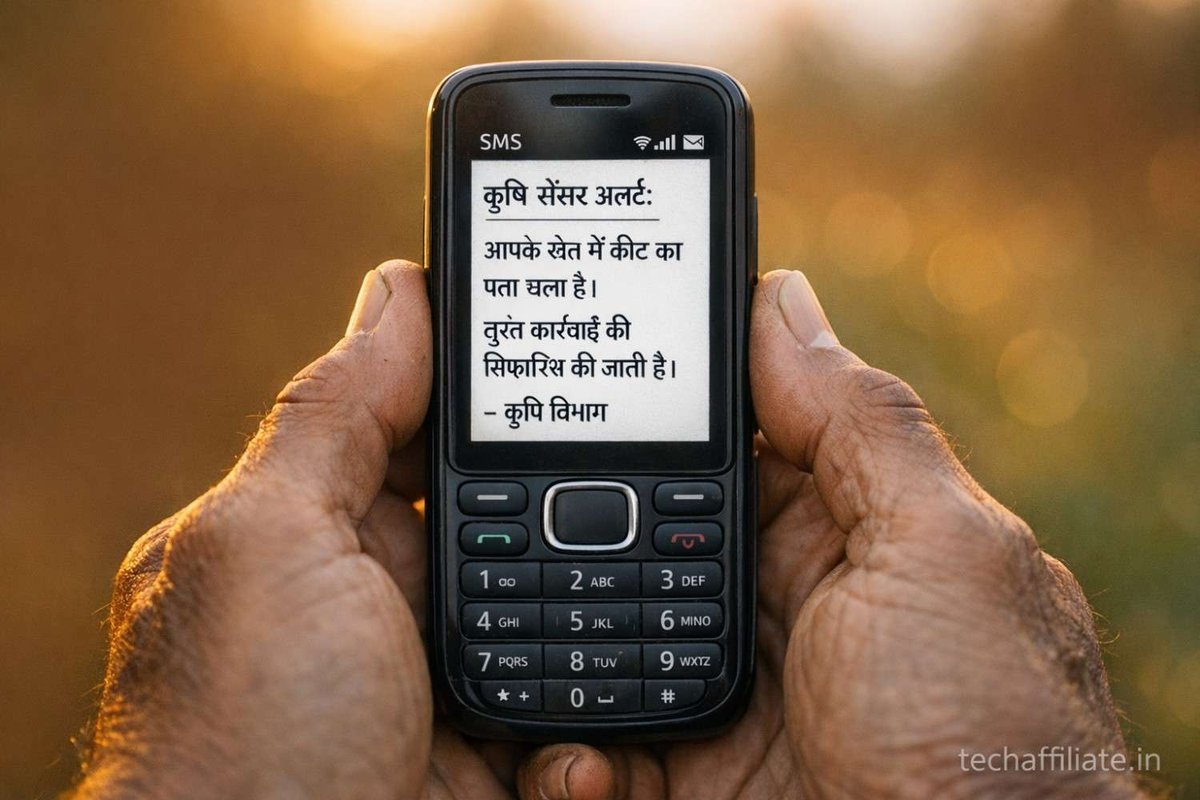

Agricultural sensors predict pest outbreaks and send SMS alerts to farmers who may not own smartphones. Smart meters manage electricity loads to prevent blackouts in grids that can't afford waste. Payment systems authenticate users through behavioral patterns because passwords add friction in markets where every second of transaction time matters.

The intelligence here isn't about lifestyle optimization; it's about making essential systems work at scale. When a billion people need infrastructure that adapts in real time, ambient AI isn't a luxury. It's a practical necessity.

In Mumbai's dense urban corridors, delivery platforms route drivers through traffic using real-time data most riders never see. The system predicts demand surges before they happen, adjusting prices and driver availability in the background. You open the app, your food arrives in 30 minutes, and you never know about the dozens of micro-optimizations that made it possible.

This reveals something important: ambient intelligence takes the shape of local needs. In dense cities, it manages scarcity. In logistics networks, it routes around bottlenecks. In healthcare systems stretched thin, it triages silently in the background.

The common thread is that it works best when it solves problems people are too busy to monitor themselves.

The Opacity Tax

Here's the problem: systems that work in the background are nearly impossible to audit. When your email filter misclassifies something important, you might never know. When your job application gets screened out by an automated system, there's no notification. When your navigation app routes you away from certain neighborhoods, the choice is invisible.

Convenience demands a price: you lose visibility into how decisions are being made on your behalf.

These aren't theoretical concerns. They're happening constantly, quietly shaping the boundaries of your experience without announcement.

You can't question decisions you don't know are being made. You can't appeal to systems designed to never interrupt you. This is the opacity tax: the price of convenience is a loss of visibility into how choices are being made on your behalf.

The risk isn't primarily about privacy violations, though those exist. It's about drift. Small automated adjustments accumulate over time. Your music taste narrows slightly. Your content feed becomes more predictable. Your routes through the city follow similar patterns. None of this feels like a loss because you didn't consciously choose the alternative. But the landscape of your options quietly reshapes itself according to patterns you never explicitly endorsed.

Think about your music streaming app. When was the last time it genuinely surprised you? Not with a new song from an artist you already know, but with something completely outside your established patterns? The algorithm optimizes for engagement (keeping you listening), not for discovery or growth. Over months, your musical world contracts without you noticing. You're not unhappy with the choices. You're just... contained.

This is drift in action. Not dramatic. Not painful. Just a slow narrowing of possibility.

What Comes After Invisibility

We're not going back to manual control of every trivial decision. The cognitive load is real, and ambient systems genuinely solve it. But perhaps the future isn't making these systems more invisible; it's developing ways to occasionally make them visible again.

Think of it like a nutrition label. Most of the time, you don't read it. But it's there when you want to check. What if ambient systems offered periodic reports? "This month, your email filter blocked 47 messages. Here are the ones it was least certain about." Not constant interruption, which defeats the purpose, but moments of transparency when you want them.
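To make the idea concrete, here is a minimal sketch of how such a report could be assembled. Everything in it is an assumption made for illustration: the `FilterDecision` record, the `spam_score` field, and the 0.5 blocking threshold are hypothetical and do not describe how any real email provider works. The only idea it demonstrates is that "least certain" can be read as "score closest to the threshold."

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FilterDecision:
    """One logged decision from a hypothetical spam filter."""
    subject: str
    spam_score: float  # assumed: estimated probability of spam, 0.0 to 1.0
    blocked: bool


def least_certain_blocks(decisions, threshold=0.5, limit=3):
    """Return blocked messages whose scores sit closest to the threshold."""
    blocked = [d for d in decisions if d.blocked]
    # A score near the threshold means the filter was close to deciding otherwise.
    return sorted(blocked, key=lambda d: abs(d.spam_score - threshold))[:limit]


def monthly_report(decisions, threshold=0.5, limit=3):
    """Build a short plain-text summary of what the filter did."""
    blocked_count = sum(1 for d in decisions if d.blocked)
    lines = [
        f"This month, your email filter blocked {blocked_count} messages.",
        "Here are the ones it was least certain about:",
    ]
    for d in least_certain_blocks(decisions, threshold, limit):
        lines.append(f"- {d.subject} (spam score {d.spam_score:.2f})")
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up sample data, purely for demonstration.
    sample_log = [
        FilterDecision("Win a free cruise", 0.99, True),
        FilterDecision("Invoice from your landlord", 0.55, True),
        FilterDecision("Team lunch Friday", 0.05, False),
        FilterDecision("Your job application update", 0.62, True),
    ]
    print(monthly_report(sample_log, limit=2))
```

The report stays silent by default and surfaces only the borderline cases, which is the nutrition-label idea: nothing interrupts you, but the information is there when you go looking.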

The solution isn't rejecting automation; it's building occasional windows into it.

Some systems are already moving in this direction. A few streaming platforms show you why they recommended something. Some navigation apps display alternative routes and their trade-offs. The question is whether this becomes standard practice or remains a gesture most users ignore.

But transparency alone isn't enough. What we need is practical literacy: simple habits that help you stay aware without becoming paranoid. Here's what that might look like:

Once a month, ask yourself three questions:

- What decisions did I use to make that now happen automatically?

- When was the last time my recommendations surprised me?

- What would I choose differently if I saw all the options?

You don't need to audit every algorithmic decision. You just need to remember that they're being made. The goal isn't control; it's awareness. It's the difference between drifting and choosing a direction.

Living With the Invisible

The intelligence shaping your daily experience isn't getting louder. It's getting quieter. More embedded. More automatic. More assumed.

This isn't necessarily a crisis, but it does require a kind of awareness we're still developing. The ability to periodically ask: what decisions am I no longer making? What patterns have I delegated? Where has convenience crossed into drift?

The machines you've stopped noticing haven't stopped watching. And the most sophisticated technology in your life might be the technology you've learned to ignore.

Tomorrow morning, your coffee will be ready at 6:47 AM. The temperature will be exactly right. The system will have learned, adjusted, and anticipated your needs perfectly. You'll shuffle into the kitchen, half-awake, and drink it without thinking.

But maybe, just for a moment, you'll pause. Not to reject the convenience. Not to unplug or go back to manual settings. Just to notice. To remember that you didn't choose this particular time or temperature. The system did. Based on patterns you didn't consciously create.

And that's worth noticing. Even if you go right back to not thinking about it. Because the act of noticing, even occasionally, is what keeps drift from becoming destiny.

The intelligence is still invisible. But now, at least, you remember it's there.



